Act MP Withdraws Bill to Criminalise Explicit Deepfake Images

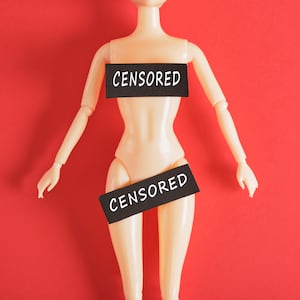

New Zealand Act Party MP Laura McClure has formally withdrawn her proposed legislation to criminalise sexually explicit “deepfake” images. The Deepfake Digital Harm and Exploitation Bill would have amended existing law to broaden the definition of an “intimate visual recording” to include images or videos that depict a person’s likeness in intimate contexts without their consent.

McClure has said that, after lodging the bill, she heard from victims of deepfake abuse who reported severe personal and professional harm. “Since I lodged my bill, I’ve heard from victims who’ve had their lives derailed by deepfake abuse,” she said, underlining what she sees as an urgent need for legal protection against this form of digital exploitation.

Details of the Proposed Legislation

The bill sought to update the legal framework for intimate recordings to keep pace with advances in image manipulation. By covering synthesised or altered images, not only genuine recordings, it aimed to protect people from the non-consensual use of their likeness in explicit material.

Supporters regarded McClure’s initiative as an important step towards safeguarding personal rights in an increasingly digital era. Its withdrawal is a setback for advocates who argue that legislation is essential to combat the growing prevalence of deepfakes and their impact on privacy and consent.

The reasons for the withdrawal have not been fully disclosed, but the move raises questions about how to legislate for technology that evolves rapidly. It may also indicate that the proposed measures need further discussion and refinement before they can effectively address the challenges posed by deepfakes.

The Broader Context of Deepfake Technology

Deepfake technology, which uses artificial intelligence to create realistic-looking fake videos and images, has raised significant concerns regarding consent, privacy, and misinformation. The ability to manipulate visual content poses risks not only to individual reputations but also to societal trust in media.

Legislators and policymakers worldwide are grappling with how to regulate this emerging technology. Some countries have already begun implementing laws aimed at addressing the misuse of deepfake content, while others are still in the early stages of developing legal frameworks.

McClure’s bill was part of a broader effort to adapt legal standards to modern technology and to protect individuals from the harms associated with deepfakes. Debate over how to regulate deepfakes is likely to continue as lawmakers and the public respond to the evolving digital landscape.