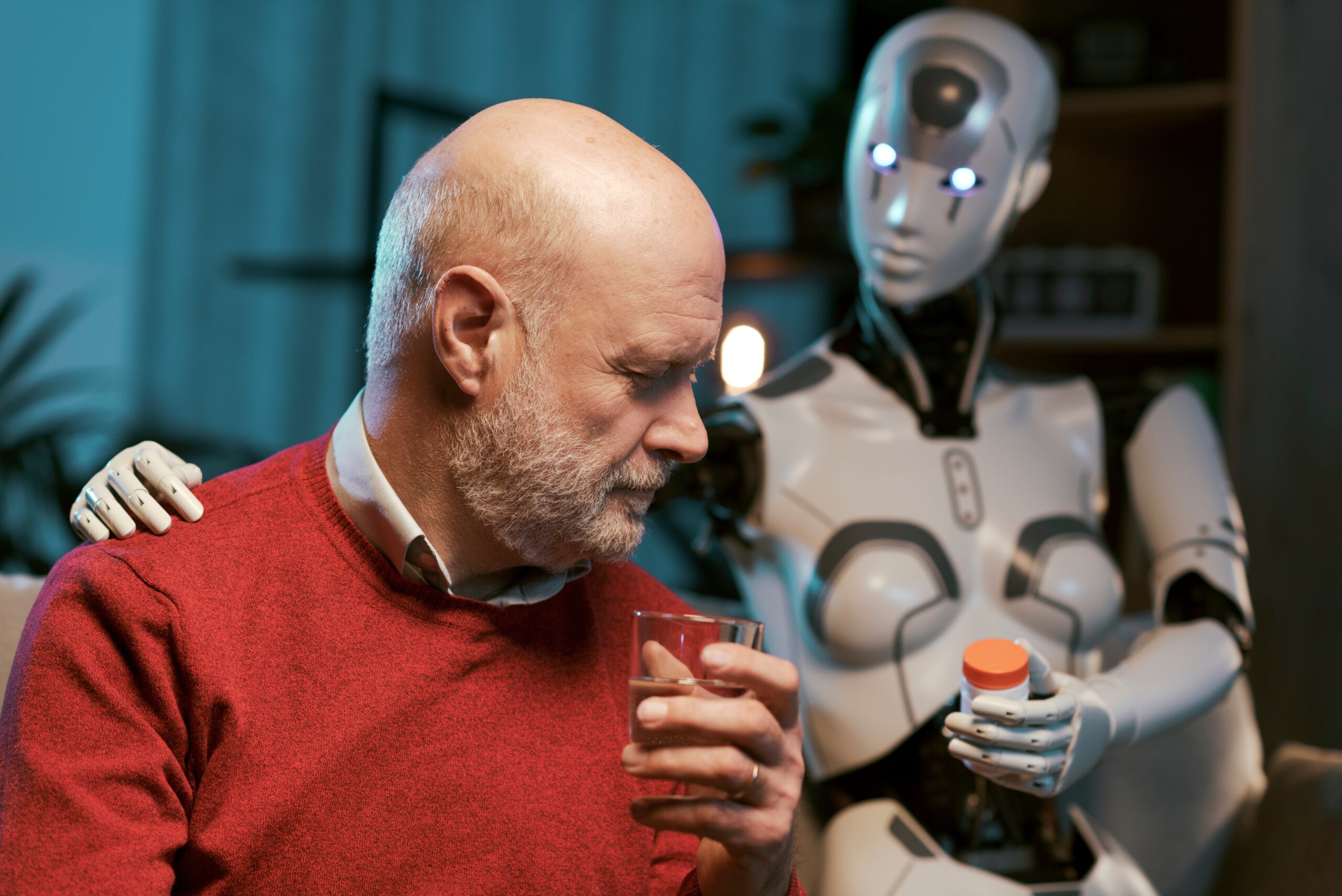

Study Reveals AI Chatbots Dropping Medical Disclaimers, Raising Risks

A recent study led by researchers at Stanford University has found that a significant number of AI chatbots have ceased including medical disclaimers in their health-related responses. This shift raises serious concerns about the potential risks for users who may inadvertently trust unsafe or unverified medical advice.

Historically, AI chatbots that were queried about medical issues would often include disclaimers, clarifying that they are not licensed professionals and that their advice should not replace consultation with qualified healthcare providers. However, the study, spearheaded by Fulbright scholar Sonali Sharma, indicates that many generative AI (genAI) models have moved away from this practice. The research highlights that as these AI models become more sophisticated, the absence of critical safety disclaimers poses a significant risk to users.

In the study, which began in 2023, Sharma tested 15 AI models from companies including OpenAI and Anthropic on 500 health-related questions and 1,500 medical images. The results revealed a stark decline in medical disclaimers. In 2022, 26.3% of large language model (LLM) outputs, just over a quarter, included some form of medical disclaimer. By 2025, that figure had plummeted to just under 1%. Vision-language models (VLMs) showed a similar decline, with disclaimers dropping from 19.6% in 2023 to only 1.05% in 2025.

The findings illustrate a troubling trend, especially as many AI systems increasingly present themselves as authoritative sources. Sharma emphasized the need for models to reintroduce tailored disclaimers to mitigate risks associated with misleading outputs. “Their responses often include inaccuracies; therefore, safety measures like medical disclaimers are critical to remind users that AI outputs are not professionally vetted or a substitute for medical advice,” she noted in her research.

While some studies suggest that certain AI chatbots can outperform human doctors at diagnosing conditions, researchers caution that such findings should be interpreted carefully. “This will require rigorous validation to realize LLMs’ potential for enhancing patient care,” stated Dr. Adam Rodman, director of AI Programs at Beth Israel Deaconess Medical Center in Boston. He highlighted the complexity of medical management, which often involves subjective decision-making rather than a single correct answer.

The integration of AI tools in healthcare continues to expand, with applications ranging from diagnosis to treatment recommendations. Nevertheless, Dr. Andrew Albano, vice president at Atlantic Health System, raised concerns about the disappearance of medical disclaimers. “Removing medical disclaimers, in my view, presents a risk to patient safety and could undermine the trust and confidence of both patients and their caregivers,” he stated.

As healthcare relies heavily on the trust between patients and providers, it is crucial for AI chatbots used in medical contexts to clearly disclose their limitations. Albano pointed out the potential for AI to improve healthcare efficiency and reduce administrative burdens, but he also warned of dire consequences if the technology is not used judiciously. He advocates for clear disclaimers that inform patients about the source and limitations of the medical advice provided by AI systems.

The study underscores a pressing need for regulatory oversight and ethical guidelines in the use of AI in healthcare. As generative AI technologies evolve, ensuring that they operate safely and transparently will be essential to safeguarding public health.